Why do some Shopify tests seem to confirm what we already suspect? Have you ever paused a test the moment it looked like your idea was working? If these questions make you smile or raise an eyebrow, then you’re in the right place. By reading this article, you’ll discover how confirmation bias can sneak into your Shopify A/B testing process and steer your conclusions in unhelpful directions. You’ll also learn straightforward methods to keep your results honest, data-driven, and truly helpful. Ready to see how it all fits together? Let’s jump right in.

Understanding Confirmation Bias in E-commerce Testing

In this section, we’ll explore what confirmation bias is and why it matters in the world of Shopify A/B testing. We’ll look at how it shows up, why it can hurt your business, and how you can spot it early. By the end of this section, you’ll see that a small bias can cause big problems. Then we’ll move on to see how it often appears in everyday testing.

Defining Confirmation Bias in the Context of A/B Testing

Confirmation bias is the habit of looking for and giving more weight to information that supports our existing beliefs. In the realm of A/B testing, it can mean ignoring data that doesn’t fit our predictions. Instead of letting the numbers lead us, we focus on the details that back our hunch. When this happens, even small errors can add up to big misguided decisions.

We’ve defined the issue. Next, let’s see the deep reasons behind it and understand why our minds often push us to see only what we want to see.

The Psychology Behind Confirmation Bias in Data Analysis

Our brains are wired to avoid conflict. We often prefer simple stories where everything lines up with our first guess. This desire to be right can overshadow new facts and lead us to interpret data in a way that feels comfortable. In A/B testing, this might show up as cherry-picking numbers that match what we expected or ignoring signals that point in a different direction.

So, we know the mental side of confirmation bias. Let’s zoom in on how it happens specifically with Shopify store optimization.

How Confirmation Bias Manifests in Shopify Store Optimization

Imagine you’re testing a new product page layout. You think a bigger “Add to Cart” button will increase clicks. Halfway through the test, you see a small improvement, and you decide that’s enough proof. You might stop the test early because you want your idea to be right. This is exactly how bias creeps in. It can happen with new features, shipping offers, email campaigns, or anything else you decide to test.

Next, we’ll see why even small leaps to conclusions can lead to big business problems.

The Business Impact of Biased Testing Decisions

When we trust biased results, we invest time and money in ideas that may not actually boost sales. Worse, we might ignore better opportunities because we’ve convinced ourselves we already have the answer. Over time, these decisions add hidden costs and lead to disappointment when real sales don’t match our inflated hopes. Clear data, free from bias, is the backbone of good decision-making.

We’ve discovered what confirmation bias is and why it matters. Ready to see it in action? In the next section, we’ll look at some common ways this bias appears in Shopify testing.

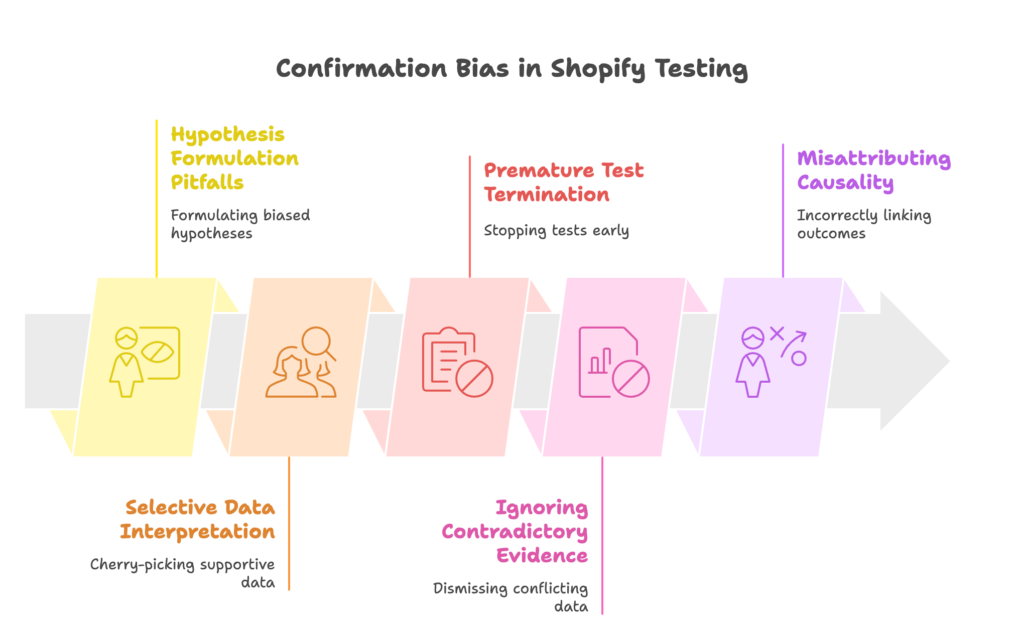

Common Manifestations of Confirmation Bias in Shopify Testing

Here, we’ll identify where bias pops up the most. We’ll look at how we form hypotheses, pick data to fit our story, end tests too soon, and much more. By the end, you’ll recognize these patterns and know what to watch for. Then we’ll move on to a more scientific way of testing that prevents such pitfalls.

Hypothesis Formulation Pitfalls

Many people start with a test idea they already believe in. When we do this, we often phrase a hypothesis in a way that almost guarantees the outcome we expect. For example, writing a hypothesis like, “A bigger checkout button will increase conversions” already signals that we’re seeking proof of a positive outcome instead of exploring both possibilities.

Forming better hypotheses is just one step. Next, we’ll see how selective data interpretation also creeps in.

Selective Data Interpretation and Cherry-Picking

Selective data reading means focusing on the numbers that back up our hopes. If we get a few encouraging metrics, we may spotlight them while ignoring less friendly figures. This approach leads to a skewed view of the test outcome, making it easy to miss the full story hidden in the rest of the data.

That’s not the only misstep, though. Let’s look at another common trap: stopping a test as soon as we see what we want.

Premature Test Termination Based on Expected Results

Cutting a test short is tempting when early data points look good. However, small sample sizes can exaggerate or hide real effects. Stopping early might lock in a choice that only seemed right during a brief lucky streak. Over time, this can steer us toward less profitable decisions.
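
To see why early stopping is risky, here is a minimal simulation sketch. It runs many A/A tests, where both groups share the same true conversion rate, and compares two stopping rules: declaring a winner the first time any interim check crosses the usual 1.96 threshold, versus checking once at the planned end. The 5% conversion rate, the check interval, and the function names are all assumptions chosen for illustration.

```python
import math
import random


def z_stat(conv_a, n_a, conv_b, n_b):
    """Pooled two-proportion z statistic; returns 0.0 when it is undefined."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0
    return (conv_b / n_b - conv_a / n_a) / se


def false_positive_rates(runs=400, visitors=2000, peek_every=200, rate=0.05, seed=7):
    """Simulate A/A tests and return (rate with peeking, rate with one final look).

    Both groups convert at the same true rate, so every "winner" is a false alarm.
    `visitors` should be a multiple of `peek_every` so the last peek is the final look.
    """
    rng = random.Random(seed)
    peeking_hits = 0
    fixed_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        crossed_at_any_peek = False
        for n in range(1, visitors + 1):
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if n % peek_every == 0 and abs(z_stat(conv_a, n, conv_b, n)) > 1.96:
                crossed_at_any_peek = True  # a peeker would have stopped here
        peeking_hits += crossed_at_any_peek
        fixed_hits += abs(z_stat(conv_a, visitors, conv_b, visitors)) > 1.96
    return peeking_hits / runs, fixed_hits / runs


if __name__ == "__main__":
    with_peeking, single_look = false_positive_rates()
    print(f"false alarms with peeking: {with_peeking:.1%}")
    print(f"false alarms with one final look: {single_look:.1%}")
```

Because the peeking rule gets many chances to catch a lucky streak, its false-alarm rate can never be lower than the single-look rate in this setup, and it is usually well above it.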

But bias doesn’t end there. Sometimes we ignore evidence that doesn’t match our wishes. Let’s see how that happens.

Ignoring Contradictory Evidence in Test Results

When a test result conflicts with what we hoped, we might dismiss it as a fluke. By waving away contradictory evidence, we never learn from the data that could guide us to better strategies. This is one of the clearest signs of confirmation bias at work.

Next, we’ll look at another way bias misleads us: when we explain wins or losses in the wrong way.

Misattributing Causality in Successful Tests

Sometimes, a test shows a positive change, and we assume it was our idea alone that made it happen. Yet, many other factors—like seasonal traffic changes—could be influencing the results. Giving full credit to the test variation, without ruling out other influences, can lead us to repeat mistakes in the future.

We’ve just looked at several ways confirmation bias appears in testing. Now it’s time to learn a scientific approach that sets a firmer foundation.

The Scientific Approach to A/B Testing on Shopify

This section explores how to test in a more impartial way. We’ll talk about objective experimentation, statistical vs. practical significance, how to decide on sample size, and how to control for outside factors. By the end, you’ll have a clear blueprint for unbiased testing. Then, we’ll dive deeper into protective measures against bias.

Principles of Objective Experimentation

An impartial test starts by asking a clear, neutral question. Instead of “Will this new design make us more money?” you might ask, “How will this new design affect sales?” Words matter because they keep your mind open to all outcomes. You also need a plan that lets the test run its course, regardless of early data spikes.

Once you design an objective test, you still need to look at your results in the right way. Let’s compare statistical significance with practical significance next.

Statistical Significance vs. Practical Significance

Statistical significance tells you whether an observed difference is unlikely to be the product of random chance alone. Practical significance, however, asks if that effect is large enough to make a real difference in your store’s performance. A test might find a tiny increase that is statistically reliable but too small to be worth your effort in the long run.
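
The difference between the two can be sketched in a few lines. The example below uses a pooled two-proportion z-test, one common frequentist check, and then compares the lift against a minimum worthwhile lift. The visitor counts, the 0.5 percentage point threshold, and the function names are invented for illustration; your own threshold depends on your margins and the cost of the change.

```python
import math
from statistics import NormalDist


def two_proportion_test(conv_a, n_a, conv_b, n_b):
    """Return (absolute lift, two-sided p-value) for a pooled two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, p_value


def verdict(lift, p_value, alpha=0.05, min_worthwhile_lift=0.005):
    """Combine the statistical check with a business threshold (an assumption)."""
    significant = p_value < alpha
    worthwhile = abs(lift) >= min_worthwhile_lift
    if significant and worthwhile:
        return "significant and large enough to act on"
    if significant:
        return "significant, but too small to matter"
    return "not statistically significant"


if __name__ == "__main__":
    # Hypothetical counts: 3.00% vs 3.06% conversion on one million visitors each.
    lift, p_value = two_proportion_test(30_000, 1_000_000, 30_600, 1_000_000)
    print(verdict(lift, p_value))  # prints: significant, but too small to matter
```

With a very large sample, even a 0.06 percentage point difference clears the significance bar, which is exactly why the second check is worth writing down before the test starts.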

Now we’ll see why sample size and testing duration play a big role in getting solid results.

Sample Size Determination and Testing Duration

With too few data points, random noise can look like a real effect (a false positive) or hide one that exists (a false negative). It’s similar to flipping a coin a few times and believing a lucky streak is a pattern. A sample size calculated before launch, plus a fixed testing duration, gives you confidence that your numbers reflect reality. It’s about giving your test enough time to gather reliable data.
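
As a rough guide, the textbook normal-approximation formula for comparing two conversion rates can be sketched like this. The baseline rate, the smallest lift you care about, the 5% significance level, and the 80% power are all inputs you choose; the function names are hypothetical, and dedicated calculators may give slightly different numbers.

```python
import math
from statistics import NormalDist


def visitors_per_group(baseline_rate, min_detectable_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed in EACH group to detect an absolute lift.

    Uses n = (z_alpha + z_power)^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2.
    """
    p1 = baseline_rate
    p2 = baseline_rate + min_detectable_lift
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(n_per_group, daily_visitors):
    """Days required if all daily traffic is split evenly between two groups."""
    return math.ceil(2 * n_per_group / daily_visitors)


if __name__ == "__main__":
    # Hypothetical store: 3% baseline, hoping to detect a lift to 3.5%.
    n = visitors_per_group(baseline_rate=0.03, min_detectable_lift=0.005)
    print(n, "visitors per group")
    print(days_needed(n, daily_visitors=1500), "days at 1,500 visitors per day")
```

For this hypothetical store the answer lands at roughly 20,000 visitors per group. Halving the lift you want to detect roughly quadruples the sample, which is why small expected effects need long tests.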

Of course, you’ll also want to control for outside elements that might skew your data. Let’s see how to do that next.

Controlling for External Variables in Shopify Tests

External factors might include holidays, sudden price changes by competitors, or unexpected promotions. To reduce their effect, it’s best to schedule tests during typical traffic periods and keep other variables constant. If something big happens during the test, extend the test period or make a note so you don’t read too much into the final numbers.

Once you have a consistent approach, it helps to build a culture around testing. Let’s talk about that next.

Building a Culture of Empirical Testing

A team-wide habit of letting data lead the way is key. This means sharing results, encouraging questions, and rewarding curiosity over quick wins. When everyone believes in gathering facts before deciding, you reduce the odds that any single person’s bias takes over.

We’ve covered the fundamentals of a neutral testing process. Next, we’ll see what structures you can put in place to protect against bias.

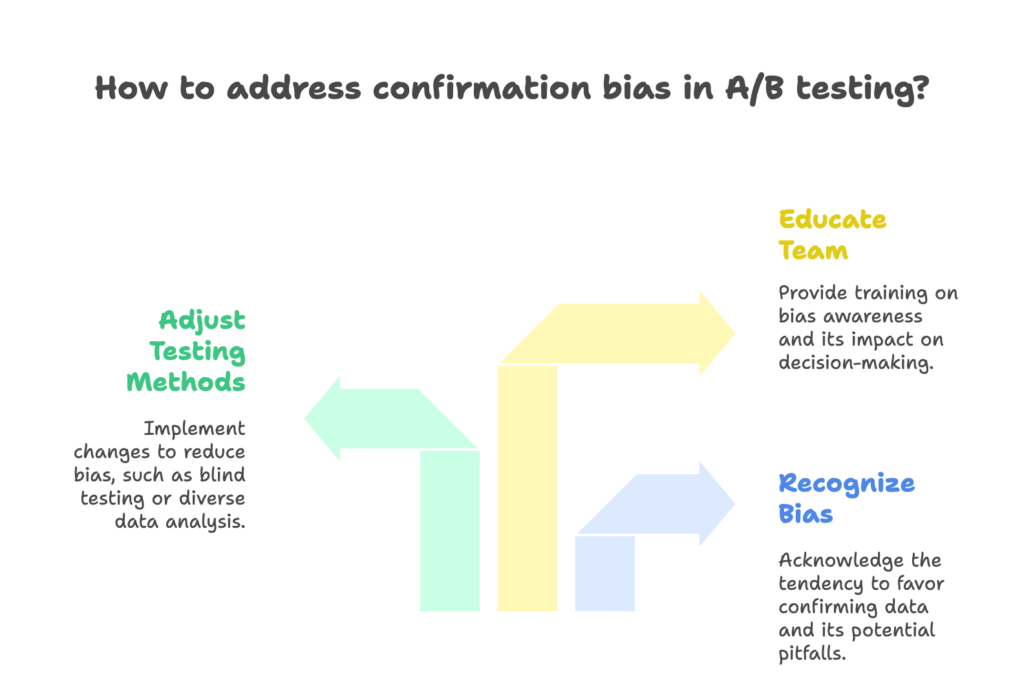

Structural Safeguards Against Confirmation Bias

This section will look at specific tools and frameworks that keep your tests unbiased. We’ll explore question-based testing, blind analysis, multi-stakeholder reviews, and more. By the end, you’ll know how to set up a testing program designed to spot bias before it sets in. Then we’ll see the practical steps for unbiased Shopify testing.

Question-Based Testing Frameworks vs. Hypothesis-Based Testing

A question-based framework starts by asking, “How does this design change cart abandonment?” instead of asserting, “Our new design will reduce cart abandonment.” The shift in wording sets a more open tone, which makes it easier to accept a range of outcomes, including no change or a worse result, rather than hunting for proof of a single idea.

Next, we’ll see how blind analysis can further remove personal bias from your tests.

Implementing Blind Analysis Techniques

In a blind setup, the person reviewing the data doesn’t know which variation is which. This prevents subconscious favoritism because you can’t skew your reading toward the variation you hope is winning. It’s a simple trick that can save you from big mistakes.
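
One simple way to do this is to swap the real variation names for neutral codes before the data reaches the analyst. The sketch below assumes your export is a list of rows with a `variant` field; that field name and both function names are made up for this example.

```python
import random


def blind_labels(variant_names, seed=None):
    """Map real variant names to neutral codes in a shuffled order.

    The returned key should be stored by someone other than the analyst.
    """
    rng = random.Random(seed)
    shuffled = list(variant_names)
    rng.shuffle(shuffled)
    return {name: f"group_{i + 1}" for i, name in enumerate(shuffled)}


def blind_rows(rows, key):
    """Return copies of the rows with the 'variant' field replaced by its code."""
    return [{**row, "variant": key[row["variant"]]} for row in rows]


if __name__ == "__main__":
    rows = [
        {"variant": "control", "converted": 0},
        {"variant": "bigger_button", "converted": 1},
    ]
    key = blind_labels(["control", "bigger_button"])
    print(blind_rows(rows, key))  # the analyst sees only group_1 / group_2
```

The key is revealed only after the analysis is written up, so the conclusion can’t lean toward the favorite.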

Still, it’s not just about the data. Bringing more people into the process can also help.

Multi-Stakeholder Review Processes

When multiple departments weigh in on test results, different points of view can reveal weak spots in your assumptions. A marketer might see a potential flaw in messaging, while a developer might spot a technical factor that influenced user behavior. These varied insights keep you from running with one narrow interpretation.

Pre-registering test details is another strong safeguard. Let’s see how it works.

Pre-Registration of Test Parameters and Success Metrics

Before you run a test, write down the metrics you’ll track and the length of time it will run. This practice stops you from changing your measurements midway just to make the results look better. It also helps others follow the logic of your test from start to finish.
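
A pre-registration can be as light as a small, read-only record saved before launch. The sketch below is one possible shape, not a standard: the field names and the `ExperimentPlan` class are assumptions. It freezes the plan and produces a fingerprint you can paste into your team notes, so any later change to the plan is easy to spot.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ExperimentPlan:
    """A test plan that cannot be edited after it is created."""
    name: str
    primary_metric: str
    secondary_metrics: tuple
    visitors_per_group: int
    max_days: int

    def fingerprint(self):
        """SHA-256 of the plan's contents; changes if any field changes."""
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    plan = ExperimentPlan(
        name="bigger-add-to-cart-button",
        primary_metric="conversion_rate",
        secondary_metrics=("add_to_cart_rate", "bounce_rate"),
        visitors_per_group=20000,
        max_days=28,
    )
    print(plan.fingerprint())
```

If someone later wants to swap the primary metric or shorten the test, they have to create a new plan with a new fingerprint, which makes the change visible instead of silent.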

Finally, open documentation helps keep everyone honest. Let’s explore that next.

Documentation and Transparency Protocols

Make test plans, metrics, and results accessible to your team. Document every phase, including any hiccups along the way. By keeping things transparent, you limit the chance of massaging the data to align with a desired outcome. Team members can always revisit the record to confirm the real story.

You now know how to set up systems that guard against bias. Next, let’s learn how to apply these methods technically within Shopify.

Technical Implementation of Unbiased Testing on Shopify

In this section, we’ll focus on the tools and setups that help you gather data without bias. We’ll also look at limits of the built-in Shopify features and how to extend them. By the end, you’ll know how to collect results you can trust. Then we’ll jump into how teams can train themselves to resist bias.

Shopify A/B Testing Tools and Their Limitations

Shopify offers basic analytics, but many store owners pair it with external apps or analytics platforms for deeper insights. While these tools can be helpful, you must set them up properly. If you only track a small subset of metrics or end your tests at the first sign of good news, the best tools in the world won’t save you from confirmation bias.

Next, let’s talk about how to structure your control and variation groups for clearer results.

Setting Up Proper Control and Variation Groups

Your control group should experience the usual store setup, while your variation group sees the change you want to test. The key is to randomize who goes into each group. Random assignment means that differences among visitors, such as traffic source, tend to be spread evenly across both groups, so they are unlikely to explain a gap in results.
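
Most testing apps handle assignment for you, but the idea is easy to sketch. The example below uses hash-based bucketing, a common stand-in for a random draw: it hashes a visitor ID together with the experiment name, so the same visitor always sees the same version and nobody can hand-pick who goes where. The `visitor_id` and experiment name are hypothetical, and this is not how any particular Shopify app is known to work.

```python
import hashlib


def assign_variant(visitor_id, experiment, variants=("control", "variation")):
    """Deterministically map a visitor to a variant with a roughly even split."""
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


if __name__ == "__main__":
    for visitor in ("visitor-1", "visitor-2", "visitor-3"):
        print(visitor, assign_variant(visitor, "button-size"))
```

Including the experiment name in the hash means a visitor who lands in the control group for one test is not automatically in the control group for the next one.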

However, focusing only on your main conversion metric might be too narrow. Let’s see why more metrics can help.

Comprehensive Metric Selection Beyond Primary KPIs

While you likely care most about sales or sign-ups, secondary metrics such as add-to-cart rate, bounce rate, page load speed, return rate, or support complaints offer a fuller picture. A test might boost sales short term but also drive up returns or complaints. Looking at multiple signals can alert you to effects the main metric alone could miss.

Broad metrics aren’t always enough. Segmenting your audience can provide extra clarity, which we’ll discuss next.

Segmentation Analysis to Avoid Overgeneralization

By breaking down results by device type, region, or traffic source, you might find that a variation works well for mobile users but not for desktop visitors. Without segmentation, you might assume a change helps everyone equally, which could lead to less targeted strategies. Analyzing segments prevents blanket conclusions.
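
A segment breakdown can be sketched as a simple grouping step. The row format below (a segment field, a `variant` field, and a 0/1 `converted` field) is an assumption about how your export looks, and the sample rows are invented.

```python
from collections import defaultdict


def conversion_by_segment(rows, segment_field):
    """Return {(segment, variant): conversion rate} for the given segment field."""
    totals = defaultdict(lambda: {"visitors": 0, "orders": 0})
    for row in rows:
        key = (row[segment_field], row["variant"])
        totals[key]["visitors"] += 1
        totals[key]["orders"] += row["converted"]
    return {key: t["orders"] / t["visitors"] for key, t in totals.items()}


if __name__ == "__main__":
    rows = [
        {"device": "mobile", "variant": "control", "converted": 0},
        {"device": "mobile", "variant": "variation", "converted": 1},
        {"device": "desktop", "variant": "control", "converted": 1},
        {"device": "desktop", "variant": "variation", "converted": 0},
    ]
    for key, rate in sorted(conversion_by_segment(rows, "device").items()):
        print(key, f"{rate:.0%}")
```

One caution: the more ways you slice the data, the more likely some slice looks like a winner by chance. It’s safer to treat a surprising segment result as a question for the next test than as proof.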

Sometimes, you might still want a second opinion. That’s where third-party analytics come in.

Integration with Third-Party Analytics for Verification

Using a second tool to verify your main results keeps you honest. If the extra data lines up with your primary source, you can be more confident in your findings. If it disagrees, it may mean you need more research before taking action.

We’ve talked about the technical side. Next, let’s see how to train your mind and your team to resist bias.

Cognitive Debiasing Techniques for Testing Teams

This section is about the human element. We’ll cover metacognitive awareness, a devil’s advocate approach, and other ways to keep your mind open. You’ll learn how to consider different explanations, separate test design from results, and handle negative outcomes without fear. Then we’ll explore real stories of how bias can derail tests.

Metacognitive Awareness Training for Analysts

Metacognition means thinking about how we think. If analysts know that they’re prone to seeing what they want to see, they can slow down and test their assumptions. Simple habits like pausing before making a conclusion can make a big difference.

Being aware is a great start, but sometimes you also need direct opposition to your viewpoint. Let’s see how that helps.

Devil’s Advocate Protocols in Test Analysis

Assign someone the job of arguing against the favored interpretation. This forces the team to look at alternative explanations and question whether the data truly supports the initial conclusion. It also encourages healthy debate, which brings hidden flaws into the light.

Next, we’ll see how exploring different possibilities keeps us from clinging to a single story.

Considering Alternative Explanations for Results

Whenever you find a pattern in your data, ask, “What else could explain this?” Maybe a marketing email was sent at the same time, or there was a temporary discount. By identifying all possible causes, you avoid jumping to the first explanation that matches your expectations.

Even with these steps, it’s wise to separate test design from the analysis. Let’s discuss why.

Separating Test Design from Results Analysis

If the person who designed the test also interprets the outcomes, they may be defensive about their idea. Let someone who wasn’t involved in the setup review the data. This separation keeps you from justifying the work you’ve already done.

Finally, it’s important to create a team environment where negative results aren’t scary. Let’s see how to do that next.

Building Psychological Safety for Negative Results

If your team feels anxious about tests that don’t go as planned, they may focus on saving face instead of learning. Encourage a mindset that sees “failures” as sources of insight. When it’s safe to be wrong, you’re less likely to bend the data to fit your hopes.

You’ve got the tools and the mindset. Now let’s look at real-world stories to see how bias creeps in and how we can fix it.

Case Studies of Confirmation Bias in Shopify Testing

In this section, we’ll visit several actual or relatable scenarios where confirmation bias affected results. You’ll see product page errors, misleading checkout numbers, and more. By the end, you’ll have a clearer sense of how these errors happen and how to correct them. Then we’ll move on to building a forward-looking testing roadmap.

Product Page Optimization Gone Wrong

A store changed its product description layout expecting a higher conversion rate. Early data showed a slight boost, so the team quickly ended the test. Sales later plateaued, and further analysis revealed that most new conversions were from a temporary social media shoutout. The layout change itself wasn’t the cause.

But that’s just one example. Let’s see how tests can mislead us at the checkout stage too.

Checkout Flow Tests with Misleading Results

One Shopify seller tested a shorter checkout flow. Early results looked good, and they ended the test. After more data came in, it turned out the new flow helped one group of customers but confused another, causing them to abandon their carts. By stopping the test early, they missed the fuller story.

Next, let’s see why mobile experience tests can also trick us.

Mobile Experience Optimization Pitfalls

A store made a version of its site tailored for mobile users, hoping for a spike in engagement. The first results looked strong, so the team assumed the design was a success. But the store never checked how desktop users fared during the same period. They might have reacted differently or run into unexpected bugs that lowered overall conversions.

We’ll now check out how confirmation bias influences email campaigns.

Email Marketing Tests with Confirmation Bias

When testing subject lines, it’s easy to highlight only positive open rates. If a few big shoppers happened to open one subject line more often, you might think it’s the better one for everyone. In reality, a broader audience might not respond the same way.

Finally, let’s see what we can learn overall.

Lessons Learned and Corrective Approaches

All these pitfalls show that early excitement can blind us to the bigger picture. Testing long enough, broadening our metrics, and being open to “bad news” helps us stay on track. Thorough analysis and cross-checking results can prevent missteps and save resources in the long run.

You’ve now seen some real-life tales of bias. Let’s put this knowledge into practice by creating a testing plan that avoids these traps.

Creating a Testing Roadmap That Minimizes Bias

In this section, we’ll detail how to build a plan for the long haul. We’ll talk about balanced test portfolios, iterative methods, user research, and how to gather knowledge for the future. By the end, you’ll have a roadmap to keep your Shopify testing honest and effective. Then we’ll explore deeper analysis methods for even richer insights.

Long-term Testing Strategy Development

It helps to plan your tests for several weeks or months in advance. Pick a mix of ideas, some big and some small, rather than chasing one “magic” fix. A balanced approach spreads your efforts across different parts of your store, reducing the chance that bias in one area causes widespread issues.

Speaking of balance, let’s see how a test portfolio keeps us fair-minded.

Balanced Test Portfolio Planning

By running multiple tests in different store sections, you avoid obsessing over a single metric or feature. This approach also provides more learning opportunities. If one test fails, others might succeed, keeping morale high. It’s like diversifying your investments so that one misstep doesn’t ruin everything.

Next, let’s look at iterative testing, which helps you adapt based on what you learn.

Iterative Testing Approaches That Challenge Assumptions

Instead of one big overhaul, try smaller updates in stages. Each step can confirm or reject a piece of your overall idea. This approach makes it easier to see which parts truly improve your store and which need rethinking.

But data isn’t everything. Talking to users can guide you, too.

Incorporating User Research to Guide Testing

Surveys, interviews, and direct feedback can reveal pain points that analytics alone might not show. This extra insight helps you design tests that matter to real customers, not just your guesses. When user research leads your ideas, you’re less likely to start with a strong bias.

Finally, storing and referencing all your past tests can help you build on what you’ve learned. Let’s see how that helps.

Building Institutional Knowledge from All Test Results

Keep a shared archive of every test’s goals, setups, outcomes, and any lessons you picked up. Over time, this library forms a clear picture of what works, what doesn’t, and why. It also encourages new team members to continue testing in the same data-focused way.

Now that you have a roadmap, let’s push further into advanced analysis to gain an even deeper grasp of your test results.

Advanced Analysis Techniques for Unbiased Insights

This section covers Bayesian vs. frequentist methods, multivariate testing, looking at trends over time, and using machine learning. By the end, you’ll see how extra tools can make your tests even more accurate. Then we’ll wrap up by looking at the future of objective testing and its ethical side.

Bayesian vs. Frequentist Approaches to A/B Testing

Frequentist testing relies on p-values and confidence intervals, while Bayesian testing updates probabilities as new data arrives. Both can be valid, and neither is immune to bias: either approach can go wrong if you act on data too soon or ignore unexpected patterns. The key is to pick your method and decision rule before the test starts, then stay consistent and transparent.
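
To make the Bayesian side concrete, here is a minimal sketch that estimates the probability that the variation’s true conversion rate beats the control’s. It assumes a flat Beta(1, 1) prior for each group and uses simple Monte Carlo sampling; the prior, the number of draws, and the function name are choices made for this example, not the method of any specific tool.

```python
import random


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20000, seed=0):
    """Estimate P(rate_b > rate_a) by sampling from each group's Beta posterior."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Beta(1 + conversions, 1 + non-conversions) is the posterior under a flat prior.
        a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += b > a
    return wins / draws


if __name__ == "__main__":
    # Hypothetical counts: 30 vs 42 orders from 1,000 visitors each.
    print(f"{prob_b_beats_a(30, 1000, 42, 1000):.1%}")
```

A number like this is easy to read, which is both its strength and its risk. Deciding in advance what probability counts as enough helps keep you from moving the goalposts after you see the result.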

When one change at a time isn’t enough, it might be time to consider multivariate testing. Let’s see how that works.

Multivariate Testing to Understand Complex Interactions

With multivariate testing, you can test multiple elements at the same time. For example, you might try different headlines, images, and button colors together. This reveals how elements interact with each other, giving you a more complete understanding of what’s happening on your store pages.
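
The catch is that combinations multiply quickly, and each one needs its own share of traffic. The sketch below lists every combination in a full factorial design and shows how thin the traffic gets. The option names and visitor total are invented for illustration.

```python
from itertools import product


def full_factorial(options):
    """Return every combination of the element options as a list of dicts."""
    names = list(options)
    return [dict(zip(names, combo)) for combo in product(*(options[n] for n in names))]


def visitors_per_combination(total_visitors, options):
    """Visitors each combination receives if traffic is split evenly."""
    return total_visitors // len(full_factorial(options))


if __name__ == "__main__":
    options = {
        "headline": ["benefit-led", "price-led"],
        "image": ["lifestyle", "product-only"],
        "button_color": ["green", "orange", "black"],
    }
    print(len(full_factorial(options)), "combinations")  # prints: 12 combinations
    print(visitors_per_combination(24000, options), "visitors each")  # prints: 2000 visitors each
```

If the per-combination number falls below what your sample size calculation calls for, it is usually better to test fewer elements at once.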

But some changes only show up over extended periods. Let’s look at that next.

Longitudinal Analysis to Account for Novelty Effects

Sometimes a new design looks amazing at first simply because it’s fresh. Over time, user excitement might fade, and the effect shrinks. Checking results at intervals over a longer period can reveal these patterns. That way, you don’t get fooled by short-lived boosts.
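
A quick way to check for a fading effect is to compute the lift week by week instead of only once at the end. The sketch below assumes you can export weekly conversion counts for each group and that the control group has at least one conversion every week; the numbers are invented to show what a novelty pattern might look like.

```python
def weekly_lift(weekly_counts):
    """Return the relative lift of B over A for each week.

    weekly_counts: list of (conv_a, n_a, conv_b, n_b) tuples, one per week.
    """
    lifts = []
    for conv_a, n_a, conv_b, n_b in weekly_counts:
        rate_a, rate_b = conv_a / n_a, conv_b / n_b
        lifts.append((rate_b - rate_a) / rate_a)
    return lifts


if __name__ == "__main__":
    # Hypothetical data: a strong first week that fades.
    counts = [(30, 1000, 39, 1000), (30, 1000, 33, 1000), (30, 1000, 30, 1000)]
    for week, lift in enumerate(weekly_lift(counts), start=1):
        print(f"week {week}: {lift:+.0%}")
```

Weekly numbers are noisy on their own, so a steady downward trend across several weeks is more telling than any single week.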

Meanwhile, grouping users by when they first arrived can give even more clarity. Let’s see how cohort analysis helps.

Cohort Analysis for Deeper Understanding

By grouping users based on when they started interacting with your site, you can see how patterns shift over time. This helps you spot trends you might miss if you group everyone together. For example, brand-new visitors might behave differently than longtime customers.

Finally, machine learning can help you handle large data sets. Let’s take a quick look at that.

Machine Learning Applications in Unbiased Test Analysis

Machine learning tools can spot hidden trends and group user behaviors automatically. However, these models rely on the data you provide. If your data is biased, machine learning simply automates that bias. Always start with clean, balanced inputs for the best results.

You’ve seen how advanced methods can push your testing beyond simple analyses. Let’s peek at where objective testing is headed in e-commerce as a whole.

The Future of Objective Testing in E-commerce

In this section, we’ll talk about AI-assisted testing, automated bias detection, continuous testing, and the moral side of experimentation. You’ll learn how to stay one step ahead as the world of e-commerce evolves. Then we’ll wrap up with references and a final reminder about Growth Suite. Let’s see what’s coming next.

AI-Assisted Test Design and Analysis

AI can quickly run through different variations and help you identify winning trends sooner. It can also point out unusual data points that might indicate something interesting or suspicious. While AI tools can accelerate testing, they still need human oversight to ask the right questions and interpret results responsibly.

Next, we’ll talk about automated bias detection built into testing workflows.

Automated Bias Detection in Testing Workflows

Some platforms now flag potential bias, such as ending a test too early or ignoring a segment of users. These tools act like a second set of eyes. They won’t remove human judgment, but they can catch mistakes before they become costly.

Continuous testing is also becoming more common. Let’s explore that next.

Continuous Testing Models vs. Discrete A/B Tests

Instead of running one test at a time, some stores constantly test new ideas in small increments. This helps them adjust quickly but also requires a clear system to track everything. While continuous testing offers agility, it can also make it easier for bias to sneak in if you’re not careful with your data and methods.

Along with these new approaches, we must remember the moral side of testing on real shoppers. Let’s touch on that now.

Ethical Considerations in E-commerce Experimentation

Testing must balance business goals with the well-being of users. Repeatedly bombarding visitors with certain tests, for example, can hurt user trust. Make sure to keep user experience in mind when designing experiments. Honest and respectful testing builds loyalty instead of suspicion.

Finally, let’s think about how to create a learning culture around unbiased testing.

Building a Learning Organization Through Unbiased Testing

When everyone values questions and data, your store grows stronger with each test. By documenting results, sharing them openly, and celebrating lessons (both positive and negative), you transform your business into a place of constant improvement. That’s the true power of unbiased testing.

You’ve reached the end of our exploration. Now let’s look at the sources that inspired these ideas, and then we’ll share a short reminder about Growth Suite.

References

- GrowthBook. (2020). “Where Experimentation goes wrong.” GrowthBook Docs. https://docs.growthbook.io/using/experimentation-problems

- LinkedIn. (2024). “Overcome Confirmation Bias in E-Commerce Decision-Making.” https://www.linkedin.com/advice/0/heres-how-you-can-overcome-confirmation-bias-e-commerce-lk7ae

- Kameleoon. (2025). “A/B testing: overcoming cognitive bias!” https://www.kameleoon.com/blog/ab-testing-cognitive-bias

- GrowthRock. (n.d.). “A/B Test Hypotheses are Broken, Here’s What We’re Using Instead.” https://growthrock.co/a-b-test-hypotheses/

- Trymata. (2024). “Impact of Confirmation Bias on Usability Testing Results.” https://trymata.com/blog/confirmation-bias/

- Digital Authority. (n.d.). “Common eCommerce A/B Testing Pitfalls and How To Avoid Them.” https://www.digitalauthority.me/resources/ecommerce-ab-testing-pitfalls/

Ready to boost your Shopify store’s sales with properly optimized discount codes? Growth Suite is a Shopify app that can help you do just that. Install it with a single click and start seeing results!