Have you ever felt like your online shopping platform knows you better than your best friend? Or maybe you’ve been convinced that the algorithm suggesting movies to you has somehow tapped into your deepest preferences? What if I told you that many of these “personalized” recommendations might actually be the result of our brains tricking us into seeing patterns where none truly exist?

Welcome to the fascinating world of the clustering illusion — a cognitive bias that affects not only how we perceive recommendations but also how these recommendation systems are designed in the first place. It’s a psychological phenomenon that impacts both shoppers and the businesses trying to serve them.

In this article, you’ll discover:

- Why our brains are hardwired to see patterns everywhere (even in random data)

- How this cognitive bias affects both shoppers and recommendation algorithms

- Practical strategies for businesses to design better recommendation systems

- Ways to protect yourself from being misled by illusory patterns

Ready to see through the illusion and understand what’s really happening behind those product suggestions? Let’s dive in!

Introduction to the Clustering Illusion and Recommendation Systems

Before we explore how the clustering illusion affects product recommendations, let’s understand what we’re dealing with. In this section, we’ll define this fascinating cognitive bias, explore how recommendation systems work, and see how they intersect with our psychological tendencies.

Defining the Clustering Illusion

The clustering illusion is our brain’s tendency to see patterns in completely random data. It’s like looking at clouds and seeing shapes of animals or faces — except instead of clouds, we’re looking at shopping behaviors, product clicks, or purchase histories.

This cognitive bias has been studied since the early 1970s, when researchers Amos Tversky and Daniel Kahneman showed that people expect even short random sequences to look evenly mixed. As a result, they consistently underestimate how often random processes produce streaks and clusters that look like patterns. Our minds are uncomfortable with randomness and prefer to find order and meaning even when there isn’t any.
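To see how streaky genuine randomness is, the short simulation below measures the longest run of heads or tails in 100 fair coin flips. It is an illustrative sketch, and the function names and parameters are our own. Averaged over many trials, the longest streak comes out close to 7, which is longer than many people would guess for a fair coin.

```python
import random


def longest_run(seq):
    """Length of the longest run of identical consecutive values in seq."""
    if not seq:
        return 0
    best = current = 1
    for prev, nxt in zip(seq, seq[1:]):
        current = current + 1 if nxt == prev else 1
        best = max(best, current)
    return best


def average_longest_run(n_flips=100, trials=10_000, seed=42):
    """Average longest streak (heads or tails) across simulated fair-coin sequences."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        flips = [rng.choice("HT") for _ in range(n_flips)]
        total += longest_run(flips)
    return total / trials


if __name__ == "__main__":
    print(f"Average longest streak in 100 fair flips: {average_longest_run():.1f}")
```

A run of six or seven identical outcomes in a sequence of shopping events, clicks, or recommendations is therefore weak evidence of anything beyond chance.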

The clustering illusion is related to other cognitive biases like:

- Apophenia – seeing connections between unrelated things

- Pareidolia – seeing specific patterns like faces in random stimuli (think of the “face on Mars”)

In everyday life, the clustering illusion appears when gamblers think they’re on a “hot streak,” when investors spot “trends” in stock market fluctuations, or when shoppers believe they’ve discovered a store’s discount pattern.

Product Recommendation Systems Overview

Now, let’s turn to recommendation systems — those seemingly magical algorithms that suggest products you might like. These systems have evolved dramatically over the past decades:

- The simplest approach recommends the same popular items to everyone

- Collaborative filtering, pioneered in early-1990s research systems such as Tapestry and GroupLens, suggests products based on what similar customers purchased

- Content-based filtering recommends products with similar attributes to those you’ve liked

- Modern hybrid systems combine multiple approaches with machine learning
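The collaborative-filtering idea can be sketched in a few lines. The version below is a toy built on stated assumptions, not any retailer’s production method. It scores item pairs by the cosine similarity of their co-purchase counts (an item-to-item variant) and recommends the items most similar to what a customer already owns. The baskets and function names are hypothetical.

```python
from collections import defaultdict
from itertools import combinations
from math import sqrt


def item_similarities(baskets):
    """Cosine similarity between items, treating each item as a 0/1 vector over customers."""
    item_count = defaultdict(int)  # number of customers who bought each item
    pair_count = defaultdict(int)  # number of customers who bought both items of a pair
    for basket in baskets:
        items = sorted(set(basket))
        for item in items:
            item_count[item] += 1
        for a, b in combinations(items, 2):
            pair_count[(a, b)] += 1
    sims = defaultdict(dict)
    for (a, b), both in pair_count.items():
        score = both / sqrt(item_count[a] * item_count[b])
        sims[a][b] = score
        sims[b][a] = score
    return sims


def recommend(sims, owned, k=3):
    """Rank items the customer does not own by summed similarity to items they do own."""
    scores = defaultdict(float)
    for item in owned:
        for other, s in sims.get(item, {}).items():
            if other not in owned:
                scores[other] += s
    # Highest score first; ties broken alphabetically so results are deterministic.
    return sorted(scores, key=lambda i: (-scores[i], i))[:k]


if __name__ == "__main__":
    baskets = [  # hypothetical purchase histories, one list per customer
        ["tent", "stove", "lantern"],
        ["tent", "stove"],
        ["tent", "sleeping bag"],
        ["novel", "bookmark"],
    ]
    print(recommend(item_similarities(baskets), {"tent"}))
```

With only four customers, the strong “tent, then stove” link rests on just two baskets. That is exactly the kind of thin evidence the clustering illusion thrives on.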

The business impact of these systems is enormous. A widely cited McKinsey estimate holds that about 35% of what consumers purchase on Amazon comes from product recommendations. Netflix executives have estimated that the company’s personalization and recommendation systems save it more than $1 billion a year by reducing subscriber churn.

As consumers, we’ve grown to expect personalized recommendations. In one Accenture survey, 91% of shoppers said they were more likely to shop with brands that recognize and remember them and provide relevant offers and recommendations.

The Intersection of Cognitive Bias and Machine Learning

Here’s where things get really interesting: the clustering illusion doesn’t just affect how we perceive recommendations — it influences how the algorithms themselves are designed and trained.

Data scientists and algorithm developers are human too, and they bring their own pattern-seeking biases to their work. They might:

- Select features for algorithms based on patterns they think they see in the data

- Misinterpret random correlations as meaningful insights

- Design systems that reinforce existing patterns rather than discovering new ones

This creates a feedback loop: algorithms detect patterns (some real, some illusory), users respond to recommendations based on these patterns, and this behavior data feeds back into the algorithm, potentially reinforcing the illusion.

What started as a random correlation between products can become an artificial pattern over time simply because the recommendation system kept suggesting them together.

Fascinating, isn’t it? Our brains love patterns so much that we’ve built this tendency right into our technology. But what’s happening inside our minds to create this illusion in the first place? Let’s explore the psychological foundations that make us so susceptible to seeing meaning in randomness.

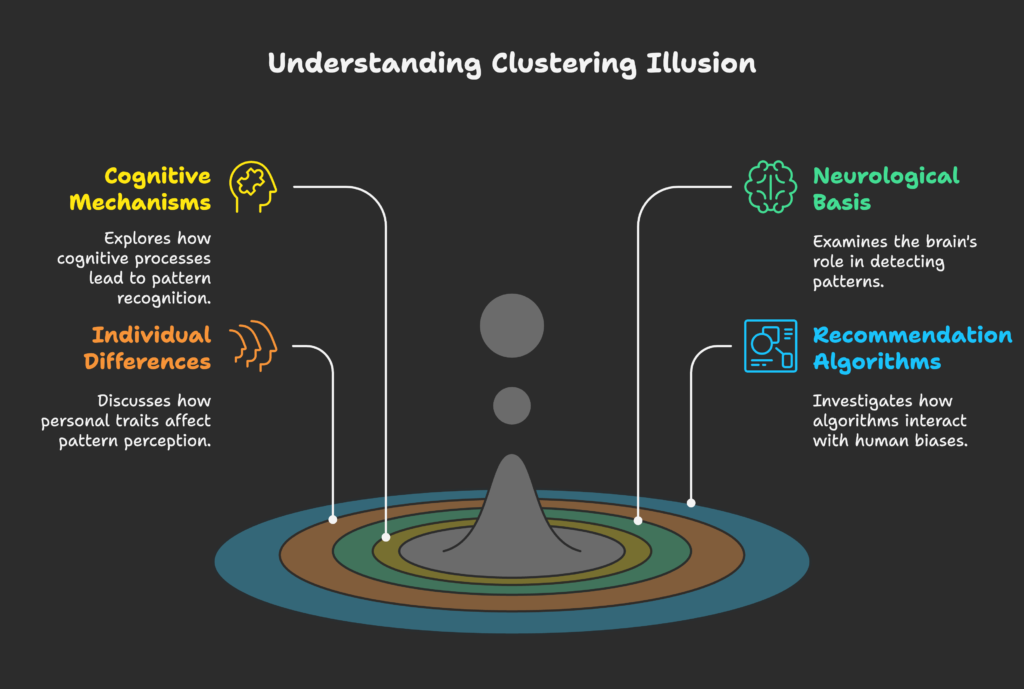

Psychological Foundations of the Clustering Illusion

Why are humans so prone to seeing patterns everywhere? In this section, we’ll explore the cognitive mechanisms, neurological basis, and individual differences that make us susceptible to the clustering illusion when interacting with product recommendations.

Cognitive Mechanisms Behind Pattern Recognition

Our brains are fundamentally pattern-recognition machines. From an evolutionary standpoint, this makes perfect sense — the ability to quickly recognize patterns helped our ancestors identify predators, find food, and survive in complex environments.

Several cognitive mechanisms contribute to our pattern-seeking tendencies:

- The need for meaning and structure: Humans are uncomfortable with randomness and prefer explanations over uncertainty. When we shop online and receive recommendations, we instinctively look for the “why” behind the suggestions.

- The representativeness heuristic: We judge the probability of an event by how similar it appears to our mental prototype. If recommendations match our self-image, we’re more likely to believe they’re accurately personalized.

- Underestimation of randomness: We consistently underestimate how “streaky” truly random processes can be. A series of spot-on recommendations might be coincidental, but we’ll attribute it to algorithm brilliance.

- Selective attention and confirmation bias: We notice and remember the recommendations that confirm our existing beliefs while ignoring those that don’t fit the pattern.
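A quick binomial calculation shows how easily a “spot-on” streak can arise with no personalization at all. The numbers are hypothetical assumptions: suppose 30% of items in a category would appeal to a given shopper anyway, and each visit shows 5 recommendations picked at random. Getting at least 4 hits on one visit then has a probability of only about 3%. Over 20 visits, however, the chance of at least one such visit rises to roughly 46%.

```python
from math import comb


def prob_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical assumptions: 30% of items in a category would appeal to this shopper
# anyway, and each visit shows 5 recommendations chosen with no personalization at all.
single_visit = prob_at_least(4, 5, 0.3)
over_20_visits = 1 - (1 - single_visit) ** 20

print(f"P(at least 4 of 5 hits on one visit): {single_visit:.3f}")
print(f"P(such a visit at least once in 20):  {over_20_visits:.2f}")
```

The memorable visit is the one with four hits. The other nineteen, which looked unremarkable, are the ones that fade.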

Neurological Basis for Pattern Detection

Our pattern-seeking behavior isn’t just psychological — it’s hardwired into our neural circuitry:

- The prefrontal cortex and parietal cortex work together to identify and interpret patterns

- The hippocampus helps us recognize familiar patterns by comparing current experiences with stored memories

- Dopamine, a neurotransmitter involved in reward and prediction, has been linked to pattern detection; some studies suggest that higher dopamine activity makes people more likely to see meaningful patterns in noise

This reward system may help explain why we get a small thrill when we feel like we’ve “figured out” the recommendation system. Similar reward processes are thought to make games and puzzles enjoyable, and they can make us overconfident in our pattern recognition.

Individual Differences in Susceptibility

Not everyone falls for the clustering illusion to the same degree. Various factors influence our susceptibility:

- Personality traits: People who score higher on measures of “need for closure” or lower on “tolerance for ambiguity” tend to see more patterns in random data

- Statistical literacy: Those with training in statistics or probability are better equipped to distinguish between genuine patterns and random fluctuations

- Cultural background: Some cultures emphasize finding connections and meaning more than others, potentially increasing susceptibility

- Expertise level: Experts in a domain can sometimes see legitimate patterns that novices miss, but they can also be susceptible to seeing illusory patterns in their area of expertise

These individual differences help explain why some shoppers are more critical of product recommendations while others enthusiastically embrace them as perfectly tailored.

Now that we understand the psychological foundations, let’s look at how machines attempt to find genuine patterns in our shopping behavior. Are recommendation algorithms better than humans at avoiding the clustering illusion, or do they amplify our biases? Let’s find out in the next section.

Machine Learning Approaches to Pattern Recognition

How do recommendation systems actually work to identify patterns in our shopping behavior? This section breaks down the technical approaches that power the product suggestions we see every day, and explores how these systems validate the patterns they find.

Clustering Algorithms in Recommendation Systems

Several types of clustering algorithms are commonly used in recommendation systems, each with its own approach to grouping similar products or customers:

- K-means clustering groups customers with similar purchase patterns, allowing businesses to target recommendations based on which customer segment you most resemble

- Hierarchical clustering builds tree-like structures of product relationships, helping identify natural product categories and subcategories

- Density-based clustering (like DBSCAN) groups customers who sit in dense regions of the data and labels isolated points as noise, which can surface niche preference groups or unusual behavior such as potential fraud

- Gaussian Mixture Models handle overlapping preference groups, recognizing that customers often belong to multiple interest categories simultaneously

For example, an online clothing retailer might use K-means clustering to identify customer segments like “trend-focused millennials,” “price-sensitive parents,” and “quality-oriented professionals” — then target recommendations accordingly.
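To make the segmentation idea concrete, here is a minimal k-means (Lloyd’s algorithm) sketch in plain Python. It is illustrative only; a real system would use a library implementation, many more features, and proper scaling. The part relevant to this article is the usage: when fed uniformly random points with no structure at all, k-means still returns exactly k tidy segments, because it partitions whatever data it is given.

```python
import random


def kmeans(points, k, iters=100, seed=0):
    """Lloyd's algorithm for 2-D points. Returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid (squared Euclidean distance).
        labels = [
            min(range(k), key=lambda c: (x - centroids[c][0]) ** 2 + (y - centroids[c][1]) ** 2)
            for x, y in points
        ]
        # Update step: move each centroid to the mean of the points assigned to it.
        new_centroids = []
        for c in range(k):
            members = [pt for pt, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append((sum(px for px, _ in members) / len(members),
                                      sum(py for _, py in members) / len(members)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster where it was
        if new_centroids == centroids:
            break  # converged: assignments will no longer change
        centroids = new_centroids
    return centroids, labels


if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical "customers" scattered uniformly: there are no real segments here.
    noise = [(rng.random(), rng.random()) for _ in range(300)]
    _, labels = kmeans(noise, k=3)
    print("segment sizes:", [labels.count(c) for c in range(3)])
```

Before naming segments like “price-sensitive parents,” it is worth checking whether similar clusters also appear when the algorithm is run on shuffled or random data.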

Pattern Detection Methodologies

Beyond clustering, recommendation systems employ various approaches to pattern detection:

- Supervised learning uses labeled data (like “purchased” vs. “not purchased”) to train models that predict future preferences

- Unsupervised learning discovers hidden structures in shopping data without predefined categories

- Feature extraction identifies the most important attributes of products and customer behavior

- Dimensionality reduction techniques like Principal Component Analysis (PCA) compress complex shopping data into a few components that retain as much of its variation as possible

The challenge for these systems is determining which patterns are meaningful signals and which are just noise. This is where even sophisticated algorithms can fall prey to the clustering illusion.

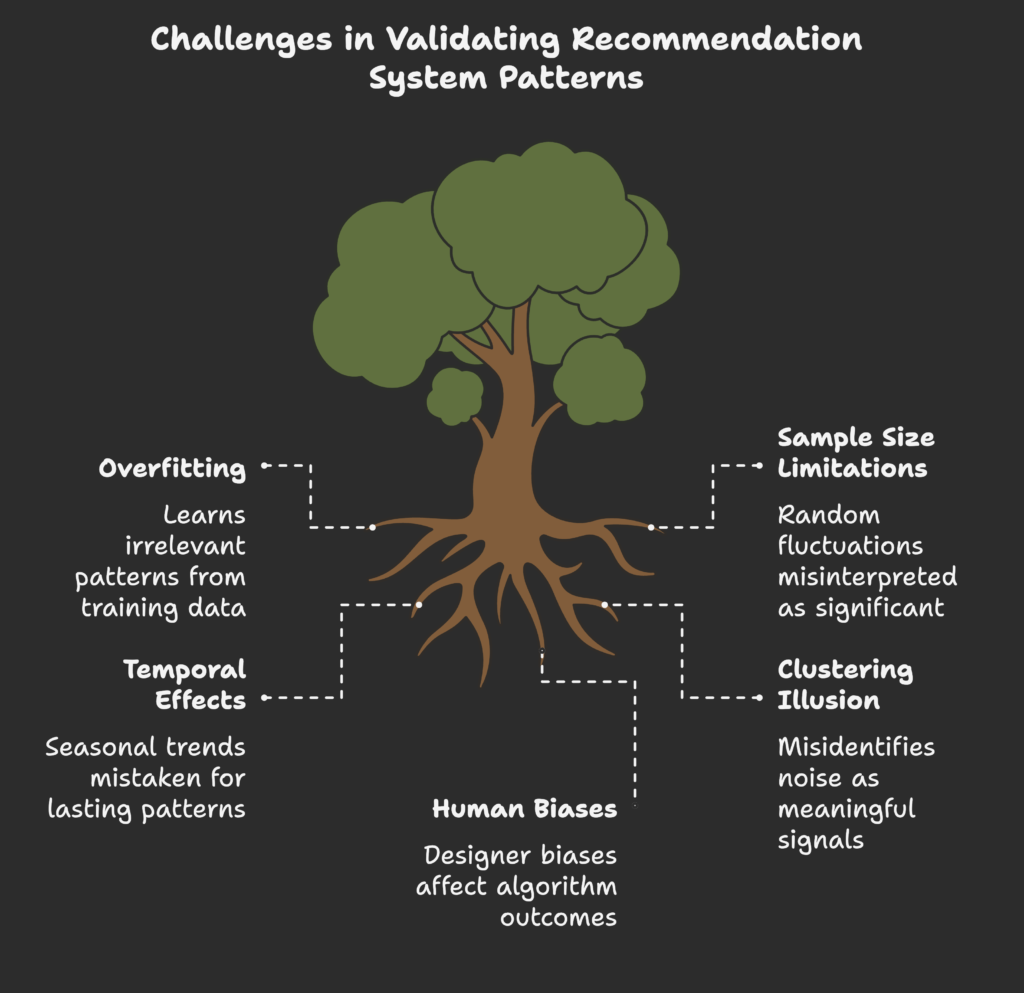

Evaluation Metrics and Validation Challenges

How do we know if a recommendation system is identifying real patterns or illusory ones? Several evaluation approaches are used:

- Statistical significance testing helps determine if observed patterns are unlikely to occur by random chance

- Cross-validation tests patterns on different subsets of data to ensure they hold up consistently

- A/B testing compares recommendation strategies with real users to measure which produces better results

- Precision and recall measure, respectively, what share of recommended items are relevant and what share of relevant items were recommended; improving one often comes at the expense of the other
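As a minimal sketch of the standard definitions (the item IDs are hypothetical), precision@k and recall@k for a single user’s ranked list can be computed like this:

```python
def precision_recall_at_k(recommended, relevant, k):
    """Precision@k and recall@k for one user's ranked list.

    recommended: ranked list of item ids; relevant: set of items the user actually engaged with.
    """
    top_k = recommended[:k]
    hits = sum(1 for item in top_k if item in relevant)
    precision = hits / k if k > 0 else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


if __name__ == "__main__":
    ranked = ["a", "b", "c", "d", "e"]  # hypothetical recommendations, best first
    liked = {"b", "e", "x"}             # hypothetical items the user actually bought
    for k in (3, 5):
        p, r = precision_recall_at_k(ranked, liked, k)
        print(f"k={k}: precision={p:.2f}, recall={r:.2f}")
```

A useful check against illusory success is to compare these scores with a random baseline. If 3 of 100 catalog items are relevant, random recommendations already achieve an expected precision of 3%, so a model’s precision means little until it is measured against that figure.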

Despite these validation techniques, recommendation systems still face challenges in avoiding illusory patterns:

- Overfitting occurs when an algorithm learns patterns that are specific to its training data but don’t generalize to new situations

- Sample size limitations can make random fluctuations appear significant

- Temporal effects may create apparent patterns that are actually just seasonal or temporary trends
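Sample-size and multiple-testing effects are easy to demonstrate. In the sketch below, every parameter is hypothetical. A recommendation tweak is “tested” in 200 customer segments where, by construction, it has no effect at all, using a standard pooled two-proportion z-test. At a 5% significance threshold, roughly 5% of segments (about 10) can be expected to show a “significant” difference purely by chance. A Bonferroni correction, which divides the threshold by the number of tests, removes nearly all of them.

```python
import random
from math import erf, sqrt


def two_proportion_p_value(x1, n1, x2, n2):
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (x1 / n1 - x2 / n2) / se
    # Two-sided normal tail 2 * (1 - Phi(|z|)), written in terms of erf.
    return 1 - erf(abs(z) / sqrt(2))


def count_false_discoveries(n_segments=200, n_per_arm=500, rate=0.05, alpha=0.05, seed=7):
    """Count segments where an effect-free tweak still looks 'significant'."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_segments):
        # Both arms share the same true conversion rate, so any difference is noise.
        a = sum(rng.random() < rate for _ in range(n_per_arm))
        b = sum(rng.random() < rate for _ in range(n_per_arm))
        if two_proportion_p_value(a, n_per_arm, b, n_per_arm) < alpha:
            hits += 1
    return hits


if __name__ == "__main__":
    print("uncorrected 'wins':", count_false_discoveries())
    print("Bonferroni 'wins': ", count_false_discoveries(alpha=0.05 / 200))
```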

Even with advanced technology, machine learning systems can still fall victim to the same pattern-seeking tendencies that affect humans. In fact, the people who design these algorithms bring their own biases to the table. How do these human biases influence recommendation systems? Let’s examine that in our next section.

The Clustering Illusion in Recommendation Algorithm Design

While algorithms may seem objective, they’re created by humans who bring their own biases to the design process. This section explores how the clustering illusion affects algorithm development, data collection, and creates self-reinforcing feedback loops in recommendation systems.

Algorithm Designer Biases

The people creating recommendation algorithms are just as susceptible to the clustering illusion as anyone else. Here’s how their biases can influence system design:

- Confirmation bias leads developers to notice patterns that confirm their hypotheses and overlook contradictory evidence

- Focus on positive patterns means they’re more likely to incorporate features that show successful recommendations while downplaying failures

- Overemphasis on correlations without sufficient investigation of causation can embed meaningless relationships into the system

For example, a recommendation system developer might notice that customers who buy premium coffee also tend to purchase high-end water filters. They might incorporate this pattern into the algorithm without investigating whether it’s a meaningful relationship or just coincidence in their limited dataset.
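The coffee-and-filter example can be quantified with the standard association-rule measures of support, confidence, and lift. In this sketch, the basket counts are invented for illustration. A lift of 4.8 looks striking, but it rests on only three shared baskets. A prudent design would therefore report the raw count alongside the ratio and test it before building it into the model.

```python
def association_stats(baskets, a, b):
    """Support, confidence, and lift for the rule 'buys a -> buys b'."""
    n = len(baskets)
    has_a = sum(1 for bkt in baskets if a in bkt)
    has_b = sum(1 for bkt in baskets if b in bkt)
    has_both = sum(1 for bkt in baskets if a in bkt and b in bkt)
    support = has_both / n
    confidence = has_both / has_a if has_a else 0.0
    # Lift compares the rule's confidence with b's overall purchase rate.
    lift = confidence / (has_b / n) if has_b else 0.0
    return {"n_both": has_both, "support": support, "confidence": confidence, "lift": lift}


if __name__ == "__main__":
    # Hypothetical dataset of 40 baskets.
    baskets = ([{"premium coffee", "water filter"}] * 3
               + [{"premium coffee"}] * 2
               + [{"water filter"}] * 2
               + [{"bread"}] * 33)
    print(association_stats(baskets, "premium coffee", "water filter"))
```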

Data Collection and Preprocessing Distortions

Even before analysis begins, biases in data collection can create illusory patterns:

- Sampling biases occur when the data collected isn’t representative of all customers

- Selection effects happen when only certain behaviors are tracked (like purchases but not browsing)

- Outlier handling decisions can dramatically change the patterns an algorithm detects

- Seasonal fluctuations might be mistaken for meaningful long-term patterns

If a recommendation system is trained primarily on data from holiday shopping seasons, it might detect patterns that don’t hold true during other times of the year, leading to less effective recommendations.

Feedback Loop Amplification

Perhaps the most powerful way the clustering illusion affects recommendation systems is through feedback loops:

- Self-reinforcing recommendations: When an algorithm suggests products together, customers are more likely to buy them together, which strengthens the pattern in future data

- Filter bubbles: As customers interact only with recommended products, their behavior data becomes less diverse, creating an artificial pattern

- Testing limitations: A/B tests might show short-term gains from exploiting illusory patterns without revealing long-term negative effects

Consider an online bookstore where the recommendation algorithm randomly starts suggesting cookbook B alongside cookbook A. As customers follow this recommendation, the data shows increasing “evidence” that these books belong together, strengthening the recommendation — even though the initial pattern was completely random.

These feedback loops can be particularly powerful because they transform what was initially an illusory pattern into a real one through the mechanism of the recommendation itself. The algorithm essentially creates the very reality it’s trying to detect.
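The cookbook scenario can be reproduced with a toy simulation under deliberately simplified assumptions. Three companion books (B, C, D) are equally appealing. The hypothetical system always shows buyers of cookbook A the one with the most recorded co-purchases, and being shown raises a book’s purchase rate. Whichever book happens to take an early lead is then recommended, which widens its lead, so the data ends up “proving” a link that began as chance. The rates and names are illustrative assumptions.

```python
import random


def simulate_feedback(rounds=1000, follow_rate=0.3, organic_rate=0.05, seed=3):
    """Toy model of buyers of cookbook A being shown one 'bought together' suggestion.

    Books B, C and D have identical organic appeal (organic_rate). The system always
    shows whichever has the most recorded co-purchases, and being shown adds
    follow_rate to that book's purchase probability.
    """
    rng = random.Random(seed)
    co_purchases = {"B": 0, "C": 0, "D": 0}
    for _ in range(rounds):
        top = max(co_purchases.values())
        # Recommend the current leader; ties are broken at random.
        shown = rng.choice([book for book, n in co_purchases.items() if n == top])
        for book in co_purchases:
            p = organic_rate + (follow_rate if book == shown else 0.0)
            if rng.random() < p:
                co_purchases[book] += 1
    return co_purchases


if __name__ == "__main__":
    for seed in range(5):
        print(seed, simulate_feedback(seed=seed))
```

Running it with different seeds typically crowns different winners. This is the signature of a self-fulfilling pattern rather than a genuine preference for any particular book.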

With all these potential biases in algorithm design, how do customers actually perceive and interact with the recommendations they receive? Are they aware of these illusions or do they fall for them just as easily? Let’s find out in the next section.

User Perception and Response to Recommendations

How do we as consumers experience and respond to product recommendations? This section explores the psychology behind our interactions with these systems, our tendency to reinforce our own biases, and how these behaviors impact the broader market.

Consumer Psychology of Recommendations

Our relationship with recommendation systems is complex and often emotional:

- Trust development: We tend to build trust in recommendation systems gradually, with each successful recommendation increasing our confidence in the system

- Perception gap: There’s often a significant difference between how accurate we perceive recommendations to be and their actual accuracy

- Personalization illusion: We may attribute a higher degree of personalization to recommendations than actually exists

- Emotional responses: Spot-on recommendations can create delight or even slight unease (“How did they know?”), while irrelevant ones can cause frustration

Research shows that consumers often rate recommendation systems as more accurate when they receive explanations along with suggestions, even if the actual recommendations don’t change. This “transparency effect” highlights how our perceptions are influenced by factors beyond actual recommendation quality.

Confirmation Bias in User Behavior

Our own cognitive biases significantly shape how we interact with recommendations:

- Selective attention: We notice and remember recommendations that align with our self-image while overlooking others

- Attribution errors: When recommendations are accurate, we credit the system’s intelligence; when they’re wrong, we blame external factors

- Memory biases: We tend to remember “hits” (good recommendations) and forget “misses” (poor ones)

- Randomness blindness: We often fail to recognize when a good recommendation might be the result of chance rather than personalization

These biases create a situation where we might perceive a recommendation system as highly accurate even when its performance is mediocre, simply because we’re mentally filtering out the evidence that doesn’t fit our preferred narrative.

Market Impacts of Consumer Clustering Illusion

Our susceptibility to the clustering illusion has broader effects on markets:

- Limited discovery: Illusory patterns can prevent us from discovering genuinely novel products that don’t fit existing patterns

- Artificial popularity: Products can gain momentum not because they’re superior but because they’ve been caught in recommendation feedback loops

- Long-tail invisibility: Unique or niche products may struggle to break through if they don’t fit established pattern recognition

- Satisfaction paradoxes: Consumers may report high satisfaction with recommendation systems while actually experiencing a narrower range of products

For instance, a music streaming service might create artificial “hit songs” simply by including them in popular algorithmic playlists, while equally good but unlucky tracks remain undiscovered.

These market effects compound over time, potentially creating an environment where recommendations become increasingly homogenized despite the appearance of personalization.

But are these theoretical concerns or real-world problems? Let’s examine some concrete examples of the clustering illusion at work in major recommendation systems to see how these biases manifest in practice.

Case Studies of Clustering Illusion in Major Recommendation Systems

Let’s examine real-world examples of how the clustering illusion has affected major recommendation systems. These cases highlight both the challenges companies face and the innovative solutions they’ve developed to address illusory patterns.

E-commerce Recommendation Failures

Even industry giants have struggled with the clustering illusion:

- Amazon’s correlation challenges: Amazon’s recommendation system has occasionally produced amusing but inappropriate suggestions, like recommending protective equipment alongside books on sensitive topics due to coincidental co-purchases

- Product grouping anomalies: Many e-commerce platforms have experienced “runaway recommendations” where unrelated products become artificially linked due to a few random initial co-purchases

- Seasonal misinterpretations: Recommendation systems have misinterpreted holiday shopping patterns as permanent preference changes, leading to irrelevant suggestions persisting long after seasonal needs have passed

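One notable example came in 2011, when two sellers’ automated pricing algorithms on Amazon pushed the listed price of a biology textbook about flies, The Making of a Fly, past $23 million. Each algorithm set its price as a multiple of the other’s, so the two kept ratcheting each other upward. The culprit was a pricing system rather than a recommendation engine, but it remains an extreme case of an algorithmic feedback loop creating an illusory value.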

To recover from these issues, companies have implemented various strategies:

- Introducing randomness into recommendations to break potential feedback loops

- Using time-weighted data that gives more importance to recent behavior

- Implementing multiple validation layers before recommendations go live
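Time-weighting is often implemented with exponential decay. In the sketch below, the 30-day half-life is an assumed, tunable parameter and the purchase history is hypothetical. Five holiday-decor purchases from mid-December end up outweighed by two running-gear purchases made a few days ago (scores of roughly 0.84 versus 1.78).

```python
from datetime import date


def decayed_weight(event_day, today, half_life_days=30.0):
    """Exponential decay: an event half_life_days old counts half as much as one from today."""
    age_days = (today - event_day).days
    return 0.5 ** (age_days / half_life_days)


def weighted_interest(events, today, half_life_days=30.0):
    """Sum decayed weights per category from (date, category) purchase events."""
    scores = {}
    for day, category in events:
        scores[category] = scores.get(category, 0.0) + decayed_weight(day, today, half_life_days)
    return scores


if __name__ == "__main__":
    today = date(2024, 3, 1)
    history = ([(date(2023, 12, 15), "holiday decor")] * 5
               + [(date(2024, 2, 25), "running gear")] * 2)
    for category, score in weighted_interest(history, today).items():
        print(f"{category}: {score:.2f}")
```

A shorter half-life reacts faster to new interests but also amplifies short-lived noise, so the value is a trade-off rather than a constant.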

Content Streaming Platform Analyses

Media streaming services provide fascinating case studies in recommendation system evolution:

- Netflix’s category evolution: Netflix has learned to balance algorithm-generated categories with human curation after discovering that purely algorithmic categories sometimes created bizarre groupings based on illusory patterns

- Spotify’s discovery challenges: Spotify has actively worked to combat the “filter bubble” problem by introducing discovery features that deliberately introduce some randomness

- “YouTube rabbit holes”: YouTube has faced criticism for recommendation algorithms that can lead viewers down increasingly narrow content paths due to feedback loop amplification

One instructive example is Netflix’s 2017 move away from predicted star ratings toward thumbs-up/thumbs-down feedback and a percentage “match” score. It can be read as an acknowledgment that a precise-looking predicted rating implied more certainty than the underlying data supported.

Financial and Investment Recommendation Systems

Financial recommendation systems face particularly high stakes when dealing with the clustering illusion:

- Stock pattern recognition pitfalls: Many automated trading systems have failed by detecting “patterns” in stock movements that were actually random fluctuations

- Robo-advisor challenges: Automated investment services have had to carefully design their systems to avoid mistaking random market movements for actionable patterns

- Risk assessment errors: Financial institutions have occasionally misclassified risk by seeing patterns in customer behavior data that didn’t actually predict future outcomes

Regulators have paid increasing attention to this issue. Financial institutions are generally expected to validate the models they rely on, which includes checking that the patterns a model uses hold up on data other than the data it was built from.

These real-world examples show that the clustering illusion isn’t just a theoretical concern — it’s a practical challenge that even sophisticated companies with vast resources struggle to overcome. So what can businesses do to mitigate these effects? Let’s explore some practical strategies in the next section.

Mitigation Strategies and Best Practices

How can businesses and developers reduce the impact of the clustering illusion in their recommendation systems? This section explores practical statistical approaches, algorithm design improvements, and user interface strategies that can lead to more reliable recommendations.

Statistical Approaches to Reduce False Patterns

Strong statistical foundations can help recommendation systems distinguish real patterns from illusory ones:

- Proper sample sizing: Ensuring data samples are large enough to support statistical significance before identifying patterns

- Randomization testing: Comparing detected patterns against what would be expected from purely random data

- Bootstrap techniques: Creating multiple sample datasets to test if patterns hold consistently across different data subsets

- Bayesian methods: Incorporating prior probability knowledge to assess the likelihood that an observed pattern is genuine

For example, before concluding that customers who buy product A tend to like product B, a system might run tests to determine the probability of seeing such a correlation by random chance, only establishing the relationship if the probability is sufficiently low.
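That randomization test can be sketched as a permutation test on co-purchase counts. This is one illustrative way to do it, with hypothetical names and data. The procedure shuffles which customers bought product B, recounts how many bought both products, and measures how often chance alone matches or beats the observed overlap.

```python
import random


def permutation_p_value(bought_a, bought_b, n_perm=5000, seed=0):
    """One-sided permutation test: is the co-purchase count higher than chance?

    bought_a, bought_b: parallel lists of booleans, one entry per customer.
    Shuffling bought_b breaks any real link between the two products while
    keeping each product's overall popularity fixed.
    """
    rng = random.Random(seed)
    observed = sum(a and b for a, b in zip(bought_a, bought_b))
    shuffled = list(bought_b)
    at_least_as_extreme = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if sum(a and b for a, b in zip(bought_a, shuffled)) >= observed:
            at_least_as_extreme += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    return (at_least_as_extreme + 1) / (n_perm + 1)


if __name__ == "__main__":
    # Hypothetical data for 200 customers: 40 bought A, 35 bought B, all B-buyers also bought A.
    bought_a = [i < 40 for i in range(200)]
    bought_b = [i < 35 for i in range(200)]
    print("p-value:", permutation_p_value(bought_a, bought_b))
```

When such a test is run over thousands of product pairs, the resulting p-values still need a multiple-comparison correction, or a few pairs will pass by luck alone.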

Algorithm Design Improvements

Thoughtful algorithm design can help avoid reinforcing illusory patterns:

- Diversity promotion: Deliberately including some recommendations outside detected patterns to avoid feedback loops

- Explainable AI approaches: Making the reasoning behind recommendations transparent helps both developers and users assess pattern validity

- Multi-objective optimization: Balancing pattern-matching with other goals like diversity, novelty, and business objectives

- Time-sensitive evaluation: Regularly reassessing patterns to identify those that may be temporary or coincidental

For instance, a book recommendation system might deliberately include occasional suggestions from genres the reader hasn’t explored, both to provide discovery opportunities and to test whether the user’s apparent genre preferences are genuine or just artifacts of limited past exposure.
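One common way to build in that kind of exploration is an epsilon-greedy policy. It is used here as an illustration rather than as something the article prescribes. In this sketch, the function name, the 10% default, and the catalog are assumptions. Each slot is usually filled from the ranked list but occasionally from items outside it, and every item is tagged so that responses to exploratory slots can be compared with responses to pattern-based ones.

```python
import random


def with_exploration(ranked, catalog, n=10, epsilon=0.1, rng=None):
    """Epsilon-greedy slate of up to n items.

    Each slot is filled, with probability epsilon, by a random catalog item outside the
    ranked list (exploration); otherwise by the next ranked item (exploitation). Once
    either source runs out, the other fills the remaining slots. Returns
    (item, explored) pairs so responses to exploratory slots can be analysed separately.
    """
    rng = rng or random.Random()
    remaining = list(dict.fromkeys(ranked))  # ranked items, de-duplicated, order kept
    ranked_set = set(remaining)
    outside = [item for item in dict.fromkeys(catalog) if item not in ranked_set]
    slate = []
    while len(slate) < n and (remaining or outside):
        explore = bool(outside) and (not remaining or rng.random() < epsilon)
        if explore:
            item = outside.pop(rng.randrange(len(outside)))
        else:
            item = remaining.pop(0)
        slate.append((item, explore))
    return slate


if __name__ == "__main__":
    mysteries = [f"mystery {i}" for i in range(20)]  # hypothetical ranked list for a mystery fan
    catalog = mysteries + [f"poetry {i}" for i in range(20)] + [f"history {i}" for i in range(20)]
    for item, explored in with_exploration(mysteries, catalog, n=8, epsilon=0.2, rng=random.Random(5)):
        print(("explore " if explored else "exploit ") + item)
```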

User Education and Interface Design

How recommendations are presented to users can significantly impact the clustering illusion:

- Confidence indicators: Communicating the system’s confidence level in each recommendation helps users appropriately weight suggestions

- Pattern visualization: Showing users why items are recommended can help them evaluate the relevance of detected patterns

- Multiple recommendation pathways: Offering different ways to discover products beyond “customers also bought” patterns

- User control: Giving customers options to adjust recommendation parameters or provide explicit feedback
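A confidence indicator needs some measure of how much evidence sits behind a recommendation. One possible approach, assumed here rather than taken from any particular platform, is a Wilson score interval on how often a suggestion has been accepted. Two links with the same 75% acceptance rate get very different intervals when one is based on 4 impressions and the other on 400. The “tentative” and “established” labels and the width threshold are hypothetical UI choices.

```python
from math import sqrt


def wilson_interval(successes, trials, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives roughly 95% coverage)."""
    if trials == 0:
        return 0.0, 1.0  # no evidence yet: anything is possible
    p = successes / trials
    denom = 1 + z**2 / trials
    centre = (p + z**2 / (2 * trials)) / denom
    half = z * sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return centre - half, centre + half


def confidence_label(successes, trials, wide=0.25):
    """Hypothetical UI rule: call a recommendation 'tentative' when its interval is wide."""
    low, high = wilson_interval(successes, trials)
    return "tentative" if high - low > wide else "established"


if __name__ == "__main__":
    for accepted, shown in [(3, 4), (300, 400)]:
        low, high = wilson_interval(accepted, shown)
        print(f"{accepted}/{shown}: [{low:.2f}, {high:.2f}] -> {confidence_label(accepted, shown)}")
```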

Amazon’s explanation feature, which tells you whether a recommendation is based on your purchase history, browsing history, or items you’ve rated, is a simple example of transparency that helps users assess recommendation relevance.

These mitigation strategies don’t just improve technical accuracy — they also build user trust and address ethical concerns about manipulation through recommendation systems. Speaking of ethics, what responsibilities do companies have when their recommendation systems might exploit cognitive biases? Let’s explore this important dimension in our next section.

Ethical Considerations and Consumer Protection

As recommendation systems become more powerful and pervasive, ethical questions arise about transparency, fairness, and protection for vulnerable users. This section explores the ethical dimensions of recommendation systems and the clustering illusion.

Transparency and Disclosure Requirements

What ethical obligations do companies have regarding their recommendation systems?

- Algorithmic transparency: Should companies be required to disclose how their recommendation systems work?

- Confidence disclosure: Is there an ethical obligation to communicate uncertainty in recommendations?

- Dark pattern avoidance: How can companies prevent recommendation systems from manipulating users through cognitive biases?

- Self-regulation initiatives: What industry standards are emerging for ethical recommendation practices?

Some companies have begun addressing these concerns, at least in part. For example, Netflix’s shift to percentage matching rather than predicted star ratings can be seen as a more modest way of presenting the uncertainty in recommendation predictions.

However, full transparency remains rare, as most companies consider their recommendation algorithms to be proprietary competitive advantages.

Vulnerable Populations and Special Considerations

Not all users are equally equipped to recognize and resist the clustering illusion:

- Age-related factors: Research suggests older adults and very young users may be more susceptible to certain types of pattern illusions

- Neurodiversity considerations: People with different cognitive styles may perceive patterns differently or be more/less susceptible to the clustering illusion

- Cultural context: Pattern recognition has cultural dimensions that recommendation systems may not account for

- Economic implications: How do recommendation systems affect users with different socioeconomic resources?

For instance, recommendation systems that push higher-priced alternatives based on illusory patterns of “what similar customers bought” could disproportionately impact users with limited financial resources who may not recognize the pattern as potentially misleading.

Regulatory Frameworks and Policy Directions

How are governments and regulatory bodies approaching these issues?

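- Current regulations: The EU’s GDPR contains provisions on automated decision-making and profiling, and the Digital Services Act requires online platforms to explain the main parameters of their recommender systems, with very large platforms also required to offer at least one option not based on profiling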

- Emerging policy approaches: Various jurisdictions are exploring specific regulations for recommendation systems

- Industry standards: What best practices are becoming standard in the industry?

- Consumer advocacy: How are consumer groups pushing for better protection against manipulative recommendations?

Regulators are increasingly concerned about recommendation systems that exploit cognitive biases like the clustering illusion, particularly when they might lead to harmful outcomes such as promoting addictive products or behaviors.

As this regulatory landscape evolves, businesses that proactively address ethical concerns may gain consumer trust and avoid future compliance headaches. But what does the future hold for recommendation systems and our understanding of the clustering illusion? Let’s explore emerging research directions in our next section.

Future Research Directions

The field of recommendation systems and our understanding of the clustering illusion continue to evolve rapidly. This section explores the cutting-edge research that will shape how we understand and address these challenges in the future.

Advanced Statistical Methods for Pattern Validation

Researchers are developing sophisticated new approaches to distinguish genuine patterns from illusory ones:

- New randomness testing frameworks that can better identify when perceived patterns are likely just statistical noise

- Cross-domain validation techniques that test if patterns hold true across different contexts and data types

- Temporal stability requirements that evaluate pattern consistency over time before accepting them as genuine

- Hybrid human-AI validation systems that combine human intuition with computational power to assess pattern validity

One promising approach involves using generative adversarial networks (GANs) to create synthetic “random” data with similar properties to the real data, then comparing detected patterns between the real and synthetic datasets to identify which patterns are likely meaningful versus coincidental.

Interdisciplinary Approaches

Some of the most exciting advances are happening at the intersection of different fields:

- Cognitive science and computer science collaboration is creating recommendation systems that account for known cognitive biases

- Anthropological perspectives are helping systems understand how cultural factors influence pattern perception

- Neuroscience insights are informing algorithm design by understanding how the brain processes patterns

- Economic approaches are helping quantify the value of accurate versus diverse recommendations

For example, researchers are using eye-tracking and neuroimaging to understand how consumers actually process recommendations, revealing that the sequence and visual presentation of recommendations significantly impact pattern perception.

Emerging Technologies and Challenges

New technologies are creating both opportunities and challenges for addressing the clustering illusion:

- Quantum computing applications may offer new ways to identify complex but genuine patterns in massive datasets

- Federated learning could enable pattern detection across organizations without compromising user privacy

- Multimodal recommendation systems that work across text, images, and other data types face unique pattern validation challenges

- Privacy-preserving methods must balance personalization with data protection

The rapid development of these technologies suggests that our understanding of the clustering illusion and our ability to mitigate it in recommendation systems will continue to evolve significantly in the coming years.

With all these fascinating developments on the horizon, what practical takeaways can different stakeholders apply today? Let’s conclude with actionable insights for businesses, developers, and consumers.

Conclusion and Practical Implications

We’ve covered a lot of ground exploring the clustering illusion and its impact on recommendation systems. Now let’s bring it all together with practical takeaways for different stakeholders, thoughts on balancing innovation with caution, and a look at the future of human-algorithm collaboration.

Key Takeaways for Different Stakeholders

For algorithm designers and developers:

- Implement rigorous statistical validation before accepting detected patterns

- Build diversity and exploration mechanisms into recommendation systems

- Use A/B testing to validate that recommendations actually improve user experience

- Remember that your own perception is subject to the clustering illusion when interpreting results
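The first and last points in this list are connected: a system that screens thousands of candidate patterns at a 5% significance level will flag roughly 5% of purely random ones as “significant”, which is the clustering illusion operating at machine scale. One common safeguard, offered here as an example rather than something this article prescribes, is the Benjamini-Hochberg procedure, which limits the expected share of false discoveries among the patterns you accept. The sketch assumes you already have one p-value per candidate pattern (for instance from a permutation test); the pattern names and p-values are hypothetical.

```python
import random
from typing import Dict, List


def benjamini_hochberg(p_values: Dict[str, float], fdr: float = 0.05) -> List[str]:
    """Names of patterns accepted by the Benjamini-Hochberg procedure.

    Sort p-values ascending, find the largest rank i with p_(i) <= (i / m) * fdr,
    and accept every pattern ranked 1..i.
    """
    ranked = sorted(p_values.items(), key=lambda kv: kv[1])
    m = len(ranked)
    cutoff_rank = 0
    for i, (_, p) in enumerate(ranked, start=1):
        if p <= i / m * fdr:
            cutoff_rank = i
    return [name for name, _ in ranked[:cutoff_rank]]


# Fixed, hypothetical p-values for ten candidate "bought together" patterns.
example_p = {"pair_01": 0.001, "pair_02": 0.008, "pair_03": 0.039,
             "pair_04": 0.041, "pair_05": 0.042, "pair_06": 0.060,
             "pair_07": 0.074, "pair_08": 0.205, "pair_09": 0.212,
             "pair_10": 0.216}
naive_example = [name for name, p in example_p.items() if p < 0.05]
print(naive_example)                     # five patterns pass p < 0.05
print(benjamini_hochberg(example_p))     # two survive the correction

# Pure noise: with no real effect, p-values are uniform on [0, 1).
rng = random.Random(42)
null_p = {f"random_pair_{i}": rng.random() for i in range(1000)}
naive_hits = sum(p < 0.05 for p in null_p.values())
bh_hits = len(benjamini_hochberg(null_p))
print(naive_hits, bh_hits)               # naive count is typically near 50
```

In the fixed example, five patterns clear a naive p < 0.05 threshold but only two survive the correction. In the simulation every p-value comes from pure noise, so any pattern the naive threshold flags is illusory.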

For business decision-makers:

- Balance the short-term gains of exploiting apparent patterns against long-term customer satisfaction

- Invest in recommendation quality assessment beyond simple click-through metrics

- Consider the ethical implications of your recommendation strategies

- Use transparency as a competitive advantage rather than a vulnerability
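As one illustration of assessment “beyond simple click-through metrics”, two widely used offline measures are catalog coverage (the share of the catalog that is ever recommended) and intra-list diversity (how dissimilar the items in a single list are). The sketch below is a minimal version under stated assumptions: recommendation lists are plain lists of item IDs, and the category-match similarity and item names are hypothetical stand-ins for whatever similarity measure your catalog supports.

```python
from itertools import combinations
from typing import Callable, Iterable, Sequence, Set


def catalog_coverage(rec_lists: Iterable[Sequence[str]], catalog: Set[str]) -> float:
    """Fraction of catalog items that appear in at least one recommendation list."""
    recommended = {item for recs in rec_lists for item in recs}
    return len(recommended & catalog) / len(catalog)


def intra_list_diversity(recs: Sequence[str],
                         similarity: Callable[[str, str], float]) -> float:
    """Average pairwise dissimilarity (1 - similarity) within one list."""
    pairs = list(combinations(recs, 2))
    if not pairs:
        return 0.0  # a list with fewer than two items has no pairs to compare
    return sum(1.0 - similarity(a, b) for a, b in pairs) / len(pairs)


# Hypothetical catalog and categories.
category = {"shoes_a": "shoes", "shoes_b": "shoes", "shoes_c": "shoes",
            "bottle": "hydration", "headlamp": "lighting", "tent": "camping"}
catalog = set(category)


def same_category(a: str, b: str) -> float:
    return 1.0 if category[a] == category[b] else 0.0


rec_lists = [["shoes_a", "shoes_b", "shoes_c"],
             ["shoes_a", "shoes_b", "bottle"]]
print(catalog_coverage(rec_lists, catalog))
print([intra_list_diversity(r, same_category) for r in rec_lists])
```

Low coverage combined with low diversity can be a warning sign that the system keeps reinforcing the same apparent pattern, even when click-through looks healthy.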

For consumers:

- Recognize that recommendations are suggestions, not perfect predictions

- Occasionally explore beyond recommended products to avoid narrowing your preferences

- Be aware of your own tendency to see meaningful patterns in what might be coincidental recommendations

- Provide explicit feedback to improve recommendation quality

Balancing Innovation with Caution

Finding the sweet spot between leveraging patterns and avoiding illusions is key:

- Value genuine patterns while maintaining appropriate skepticism about their validity

- Build systems that can learn from and correct pattern-detection errors over time

- Layer multiple validation approaches rather than relying on a single method to confirm patterns

- Design for transparency that helps both developers and users assess pattern quality

This balanced approach can help companies create recommendation systems that genuinely serve customer needs while avoiding the pitfalls of the clustering illusion.
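One way for a system to “learn from and correct pattern-detection errors over time” is to treat each pattern's success rate as an estimate that is revised as evidence accumulates, rather than trusting an early streak. The sketch below uses a Beta-Binomial (Bayesian) estimate, a standard technique chosen here for illustration. The baseline conversion rate of 5%, the prior strength of 100 pseudo-observations, and the conversion counts are all hypothetical values you would replace with your own.

```python
from dataclasses import dataclass


@dataclass
class PatternEstimate:
    """Beta posterior over the conversion rate of one recommendation pattern.

    alpha counts (pseudo-)conversions and beta counts (pseudo-)non-conversions;
    the posterior mean alpha / (alpha + beta) is the current estimate.
    """

    alpha: float
    beta: float

    @classmethod
    def from_baseline(cls, baseline_rate: float, prior_strength: float) -> "PatternEstimate":
        # Prior centered on the site-wide baseline, worth `prior_strength` observations.
        return cls(alpha=baseline_rate * prior_strength,
                   beta=(1.0 - baseline_rate) * prior_strength)

    def update(self, conversions: int, trials: int) -> None:
        # Each observed outcome adds one to alpha (converted) or beta (did not).
        self.alpha += conversions
        self.beta += trials - conversions

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)


# Hypothetical pattern: "bought X, then bought recommended Y".
estimate = PatternEstimate.from_baseline(baseline_rate=0.05, prior_strength=100)
estimate.update(conversions=4, trials=10)       # early streak: 40% raw rate
early_mean = estimate.mean
estimate.update(conversions=50, trials=990)     # more data: 54 of 1,000 overall
late_mean = estimate.mean
print(round(early_mean, 3), round(late_mean, 3))
```

The raw early rate of 40% (4 of 10) is reported as roughly 8%, because ten observations are too few to overturn the baseline; after 1,000 observations the estimate sits close to the observed 5.4%. Larger `prior_strength` values make the system more skeptical of short streaks.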

The Future of Human-Algorithm Collaboration

The most promising future for recommendation systems likely involves thoughtful collaboration between humans and algorithms:

- Complementary strengths: Algorithms excel at processing vast amounts of data, while humans can provide an intuitive assessment of whether a pattern is plausible

- Bias-aware systems that incorporate knowledge of cognitive biases into their design

- Education initiatives that improve statistical literacy among both developers and users

- Ethical frameworks that guide the development of recommendation systems that inform without manipulating

By acknowledging our shared susceptibility to the clustering illusion, we can build systems that help us navigate the abundance of choices in the modern marketplace while respecting our autonomy and cognitive limitations.

In the end, the goal isn’t to eliminate pattern recognition — it’s one of our most valuable cognitive abilities — but to distinguish between the patterns that truly help us make better decisions and the illusions that might lead us astray.

Reminder: Shopify store owners can implement many of the recommendation best practices discussed in this article using the Growth Suite application. With advanced pattern validation, transparency features, and customizable recommendation strategies, Growth Suite helps you increase sales while avoiding the pitfalls of the clustering illusion. Try it today!

References

- Clearer Thinking. (2021). Clustering Illusion: Definition, Examples and Effects. https://www.clearerthinking.org/post/clustering-illusion-definition-examples-and-effects

- Academy 4SC. (n.d.). Clustering Illusion: See the Bigger Picture. https://academy4sc.org/video/clustering-illusion-see-the-bigger-picture/

- Pain, A. (2023). Clustering Algorithms in Machine Learning: Unveiling Patterns in Data. LinkedIn. https://www.linkedin.com/pulse/clustering-algorithms-machine-learning-unveiling-patterns-aritra-pain

- Wikipedia. (2024). Clustering illusion. https://en.wikipedia.org/wiki/Clustering_illusion

- Forbes. (2024). The Clustering Illusion: What It Is And How To Overcome It. https://www.forbes.com/sites/brycehoffman/2024/06/16/the-clustering-illusion-what-it-is-and-how-to-overcome-it/

- Stacked Marketer. (n.d.). The Clustering Illusion. https://www.stackedmarketer.com/psychology-of-marketing/the-clustering-illusion/

- PubMed. (2020). SineStream: Improving the Readability of Streamgraphs by Minimizing Sine Illusion Effects. https://pubmed.ncbi.nlm.nih.gov/33048718/

- Semantic Scholar. (2024). Research on the application of visual illusion in the field of art and design. https://www.semanticscholar.org/paper/c7a1682c8155336f1d22960b0fef50e52ec8bb5f

- Semantic Scholar. (2024). Reality or Illusion: Comparing Google Scholar and Scopus Data for Predatory Journals. https://www.semanticscholar.org/paper/6f3b2e221b89fcf2350d98a70464b65ec5f03dc0

- Semantic Scholar. (2018). Illusionary Correlation, False Causation, and Clustering Illusion. https://www.semanticscholar.org/paper/3b7570f7ea1a6e6bbdf9d220daf291de9da96072

- PubMed. (2020). Phonological but not semantic influences on the speech-to-song illusion. https://pubmed.ncbi.nlm.nih.gov/33089742/

- Kahneman, D., & Tversky, A. (1972). Subjective probability: A judgment of representativeness. Cognitive Psychology, 3(3), 430-454.